
While revisiting this experiment, I found that the results are greatly improved by removing the BTService_SenseContextOverride and creating new Tasks instead. The "Act" functionality is elegantly replaced by StableCamera and PhysicsCamera nodes, which also avoid the unwanted jitter of the previous implementation.


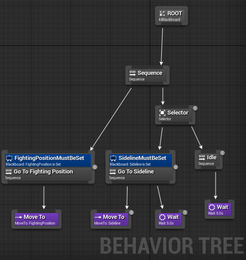

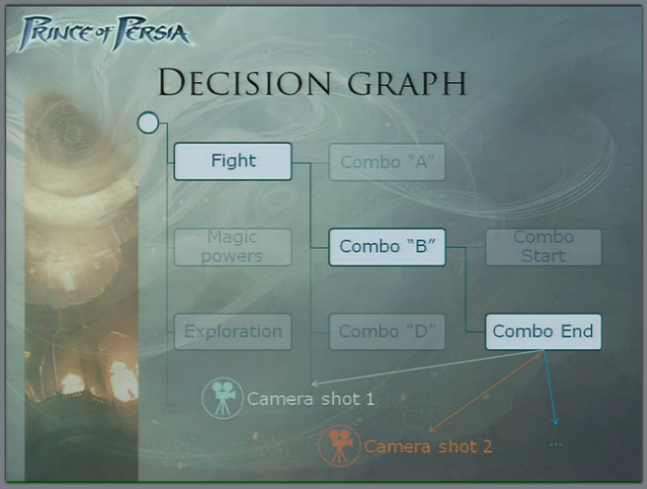

A simple behaviour tree from my Archery Tag AI

The first question I must address here: why would anyone put a behaviour* tree on a camera? Behaviour trees are a powerful tool that lets designers and artists visualize logic flow. It is easier for a non-technical person to learn and interact with a visual system that describes the states than with one where they must infer the states. The quick wins of behaviour trees over Blueprint include fewer rules to understand, clearer visual connections and flow, and nodes that can be renamed to distinguish them from other nodes, all of which help first-time users too.

The video above is part of a playlist showing how to implement behaviour trees in Unreal, and is recommended viewing for anyone who wants a practical understanding of the features tested in this experiment. Note that we implement very basic triggers with behaviour trees in this experiment; however, these triggers are simple examples and might be better implemented at the C++ level. Ideally, the process used in this experiment would be applied to empowering camera artists with control of camera behaviours in cases where performance costs matter less than giving artists a greater degree of autonomy.

This experiment begins where Real Time Cameras in Unreal Editor 4 - Part 12 ends. In that article, I also mentioned a GDC talk where Jonathan Bard from Ubisoft explains why they implemented a system like this for Prince of Persia. In that game, camera artists were given control of "thinking" for the camera using behaviour trees, but camera senses and actions were implemented by engineers. My use of Unreal's Behavior Tree mimics the Decision Graph employed by those camera artists for triggering behaviours, while I used Blueprint for engineering tasks like scripting the camera.
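To make the split between "thinking" and "sensing/acting" more concrete, here is a minimal behaviour tree sketch in plain C++. It is not Unreal's Behavior Tree API and not the Blueprint setup used in this experiment; the `CameraContext` fields and the `PullIn`, `SprintShake`, and `Follow` behaviours are hypothetical names chosen for illustration. A selector tries branches in priority order, and each branch is a sequence of a condition (a sense) followed by an action. In the Prince of Persia arrangement described above, an artist would own the shape of the tree while engineers supply the condition and action leaves.

```cpp
#include <functional>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Outcome of ticking a node, mirroring a behaviour tree's Success/Failure results.
enum class Status { Success, Failure };

// Hypothetical "senses" that engineering code would update every frame,
// plus the behaviour the tree last chose.
struct CameraContext {
    bool playerSprinting = false;
    bool nearWall = false;
    std::string activeBehaviour = "None";
};

// Base class for every node in the tree.
struct Node {
    virtual ~Node() = default;
    virtual Status tick(CameraContext& ctx) = 0;
};

// Leaf that tests a sense; engineers would supply the predicate.
struct Condition : Node {
    std::function<bool(const CameraContext&)> test;
    explicit Condition(std::function<bool(const CameraContext&)> t) : test(std::move(t)) {}
    Status tick(CameraContext& ctx) override {
        return test(ctx) ? Status::Success : Status::Failure;
    }
};

// Leaf that triggers a camera behaviour; here it only records the behaviour's name.
struct Action : Node {
    std::string behaviour;
    explicit Action(std::string b) : behaviour(std::move(b)) {}
    Status tick(CameraContext& ctx) override {
        ctx.activeBehaviour = behaviour;
        return Status::Success;
    }
};

// Ticks children in order and fails as soon as one child fails.
struct Sequence : Node {
    std::vector<std::unique_ptr<Node>> children;
    Status tick(CameraContext& ctx) override {
        for (auto& child : children)
            if (child->tick(ctx) == Status::Failure) return Status::Failure;
        return Status::Success;
    }
};

// Ticks children in priority order and succeeds as soon as one child succeeds.
struct Selector : Node {
    std::vector<std::unique_ptr<Node>> children;
    Status tick(CameraContext& ctx) override {
        for (auto& child : children)
            if (child->tick(ctx) == Status::Success) return Status::Success;
        return Status::Failure;
    }
};

// One "if this sense, then this behaviour" branch.
std::unique_ptr<Node> makeBranch(std::function<bool(const CameraContext&)> test,
                                 std::string behaviour) {
    auto seq = std::make_unique<Sequence>();
    seq->children.push_back(std::make_unique<Condition>(std::move(test)));
    seq->children.push_back(std::make_unique<Action>(std::move(behaviour)));
    return seq;
}

// The decision graph an artist might arrange: higher branches take priority.
std::unique_ptr<Node> buildCameraTree() {
    auto root = std::make_unique<Selector>();
    root->children.push_back(makeBranch([](const CameraContext& c) { return c.nearWall; }, "PullIn"));
    root->children.push_back(makeBranch([](const CameraContext& c) { return c.playerSprinting; }, "SprintShake"));
    root->children.push_back(std::make_unique<Action>("Follow"));  // fallback when nothing else applies
    return root;
}
```

For example, with `nearWall` and `playerSprinting` both false, ticking the tree returned by `buildCameraTree()` sets `activeBehaviour` to `"Follow"`; once `nearWall` becomes true, the higher-priority `PullIn` branch wins regardless of sprinting. Reordering the selector's children changes priorities without touching any sense or action code, which is the kind of control the artist-facing tree is meant to provide.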

*The answer to the second question is yes: I will insist on spelling behaviour in the Canadian/UK fashion whenever possible. I'll keep Unreal's spelling for their tools.

Video: Remi Lacoste. GDC 2013. "...Emotionally Engaged Camera..." https://archive.org/details/GDC2013Lacoste

Reference: Mark Haigh-Hutchinson. 2009. "Real-Time Cameras: A Guide for Game Designers and Developers." Elsevier.

As we come closer to completing this series of articles, I have a varied assortment of guidelines from Haigh-Hutchinson that I have not shared yet. Many of these guidelines are echoed in Remi Lacoste's GDC talk on the camera for Tomb Raider (2013), which is recommended viewing for anyone interested in cameras or in conveying emotion in video games. His PowerPoint for that talk highlights a key difference between the camera I made in Part 10 and the camera it was meant to emulate: "Instead of using an animated layer playing on top of our cameras, we used a physics based camera shake system allowing us to embrace a more custom approach." My Timeline-based implementation served as a good way to experiment with Timelines, but in this post I recreate those camera behaviours using physics instead. I also found Timelines useful for demonstrating my intentions in Hugline Miami, but ultimately physics provides a better implementation when collision is involved. Today's grab-bag of wisdom happens to include three guidelines about camera collision.


So far, my focus has been on third-person cameras. In this post, I will share some first-person camera wisdom from the reference textbook and also give third-person camera examples to contrast the two camera schemes. These examples will demonstrate the following three points as they pertain to both first-person and third-person cameras:


James Dodge

Level Designer

Archives

October 2021
