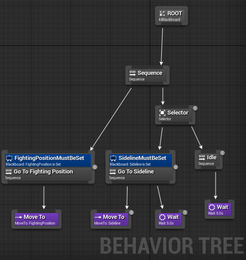

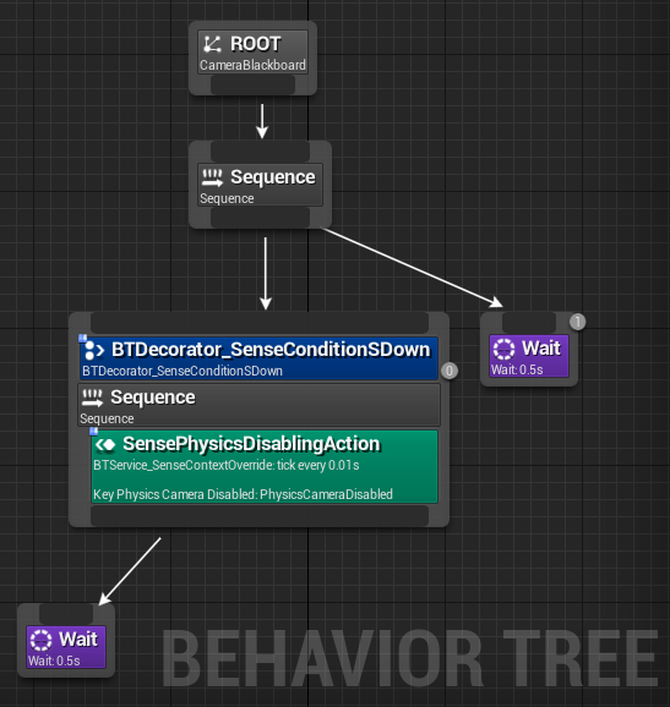

A simple behaviour tree from my Archery Tag AI

Behaviour trees are a powerful tool that allows designers and artists to visualize logic flow. It is easier for a non-technical person to learn and interact with a visual system that describes the states than with one where they must infer the states. The quick wins of behaviour trees over Blueprint include fewer rules to understand, clearer visual connections and flow, and nodes that can be renamed to disambiguate them from other nodes (all of which also help first-time users).

The video above is part of a playlist showing how to implement behaviour trees in Unreal and is recommended viewing for anyone who wants a practical understanding of the features tested in this experiment. Note that the triggers implemented with behaviour trees in this experiment are very basic examples and might be better implemented at the C++ level. Ideally, the process used in this experiment would be applied to empowering camera artists with control over camera behaviours, in cases where performance costs matter less than giving artists a greater degree of autonomy.

*The answer to the second question is yes: I will insist on spelling behaviour in the Canadian/UK fashion whenever possible. I'll keep Unreal's spelling for their tools.

Experiment: Ease of use and Visual feedback

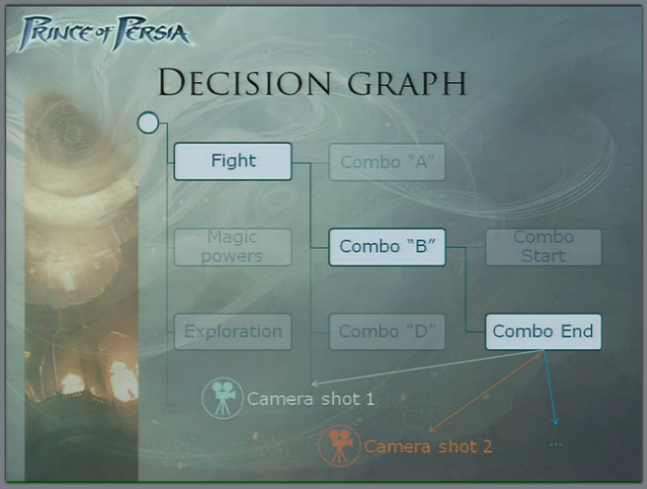

The subject (me) will create a behaviour tree that swaps the default camera for another camera while a key on the keyboard is held down. They will start with all of the "Sense" and "Act" functionality created ahead of time, and must apply it using only the Behavior Tree window to create the "Think" functionality that decides which behaviours the gameplay camera should perform under specific conditions.

Use case: the camera artist wants the default shake camera replaced with a camera locked on the player's origin when the player holds the 'S' key for a soft landing.
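A minimal, engine-free sketch of how this use case divides into "Sense", "Think" and "Act", assuming the sensed state can be reduced to a single flag for the 'S' key. The names `SenseState`, `Think`, `CameraRig` and `CameraMode` are hypothetical and not part of Unreal's API; only the body of `Think` corresponds to what the subject authors in the Behavior Tree window.

```cpp
#include <cassert>

// Hypothetical camera identifiers; the real project uses Unreal camera actors.
enum class CameraMode { DefaultShake, LockedOnOrigin };

// "Sense": pre-built input. A plain flag stands in for "is the S key held?".
struct SenseState {
    bool softLandingKeyHeld = false;
};

// "Think": the part the subject authors in the Behavior Tree window.
// Maps the sensed state to the camera that should be active.
CameraMode Think(const SenseState& sense) {
    return sense.softLandingKeyHeld ? CameraMode::LockedOnOrigin
                                    : CameraMode::DefaultShake;
}

// "Act": pre-built camera switching. Only switches when the decision changes.
struct CameraRig {
    CameraMode active = CameraMode::DefaultShake;
    int switchCount = 0;

    void Act(CameraMode desired) {
        if (desired != active) {
            active = desired;
            ++switchCount;
        }
    }
};

// One tick of the sense -> think -> act loop.
void Tick(CameraRig& rig, const SenseState& sense) {
    rig.Act(Think(sense));
}
```

Keeping `Act` idempotent in this sketch means a "Think" layer that re-evaluates every tick does not trigger redundant camera swaps while the key stays held.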

1. How fast can a first-time implementer do this? The longer it takes, the lower the ease of use. We are ignoring the fact that the subject is the one who implemented the system, because they have not worked with Unreal's Behavior Trees in the last year.

2. Is any special knowledge required to implement the use case? The "Sense" and "Act" functionality for this behaviour tree has already been scripted, and the names of the required actions are provided. All extra information required and all actions taken will be listed, with each item indicating reduced ease of use for this workflow.

3. What is the minimum number of clicks required to create the behaviour tree? The more clicks required, the lower the ease of use.

4. Can the subject complete the test without using anything other than official Unreal Engine 4 support documentation? If the test cannot be completed under these restrictions, that indicates low ease of use. All helpful links will be documented.

What visual feedback is provided?

All of the feedback available during authoring and debugging will be documented to show the benefits of this approach for the end user, and its quality will be assessed.

Results: Ease of use

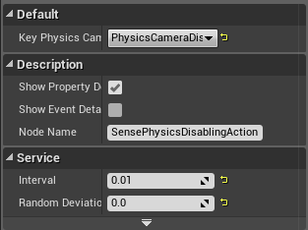

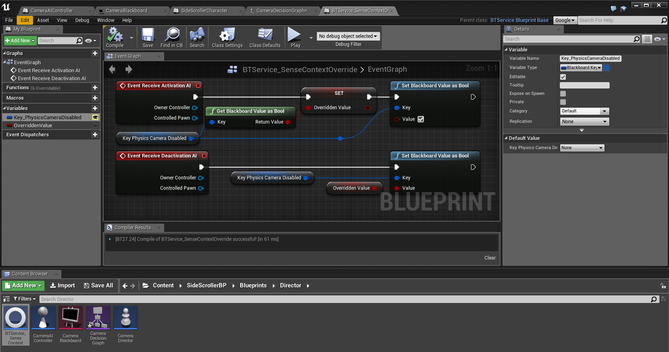

Key_PhysicsCameraDisabled must be initialized here

2. There were a few general Behaviour Tree implementation rules that I had to relearn in order to get a successful result, including:

- The user must right-click a node to add decorators or services to it

- Services (for setting Blackboard values) can only be added to composite nodes

- Services have properties that must be initialized from the default window

- Sequences halt when a child's condition check returns false. They are more useful for this test than selectors, which halt when a child's condition check returns true.
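The difference between the two composites can be modelled in a few lines of C++. This is a simplified sketch, not Unreal's implementation: it assumes each child finishes immediately with success or failure (real Behavior Tree tasks can also still be in progress), and it treats a failed decorator condition as the child failing. `RunSequence`, `RunSelector` and `Result` are hypothetical names.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Simplified result type; real Behavior Tree nodes also have an in-progress state.
enum class Result { Succeeded, Failed };

using Child = std::function<Result()>;

// Sequence: evaluates children left to right and stops at the first failure.
// `evaluated` counts how many children were actually run.
Result RunSequence(const std::vector<Child>& children, int& evaluated) {
    for (const Child& child : children) {
        ++evaluated;
        if (child() == Result::Failed) return Result::Failed;
    }
    return Result::Succeeded;
}

// Selector: evaluates children left to right and stops at the first success.
Result RunSelector(const std::vector<Child>& children, int& evaluated) {
    for (const Child& child : children) {
        ++evaluated;
        if (child() == Result::Succeeded) return Result::Succeeded;
    }
    return Result::Failed;
}
```

With the children [succeed, fail, succeed], the sequence stops after the second child and fails, while the selector stops after the first child and succeeds.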

4. I did not use any references to complete the test, but here is the official guide:

https://docs.unrealengine.com/latest/INT/Engine/AI/BehaviorTrees/index.html

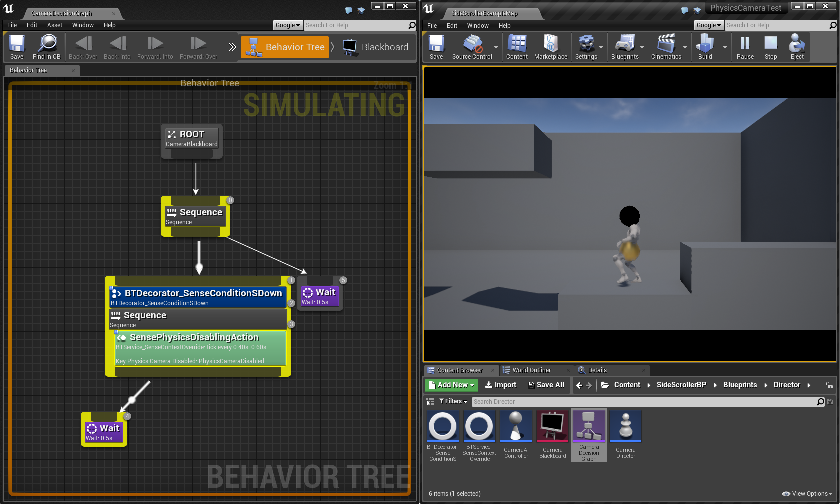

Results: Visual feedback

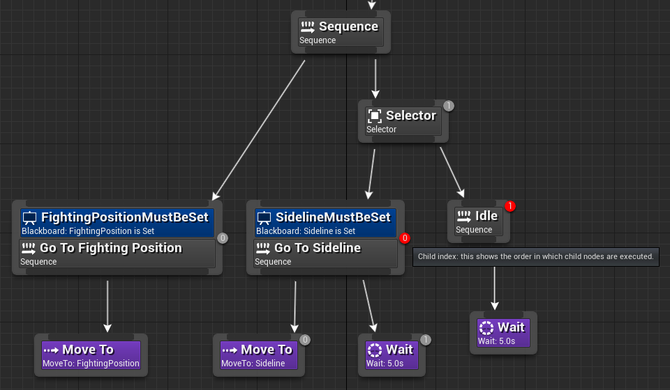

Authoring feedback includes highlighting functional areas when the mouse hovers over each node. This is quite helpful for showing the user where they can click and drag to create a new connection. Dragging a connection over an invalid target informs the user why the nodes cannot be connected. As in Blueprint, dragging to an empty space creates a new node. There are fewer node options available in a Behavior Tree than in Blueprint, making it less intimidating for new users.

Right-clicking allows the user to add services or decorators to an existing node, but this is one of the less user-friendly features because the options are hidden until the user right-clicks a node. The user must also search through a list of up to ten actions to find the one they want.

When a composite node has multiple children, a grey numbered circle appears indicating the order in which the children will be evaluated. Hovering over a numbered circle turns all of that node's siblings red.

During the test, I found this particularly useful when the camera was jumping rapidly between two states, which could have been caused by errors in the Blueprint controlling the camera's movement. However, running the game with the Behavior Tree open showed that the states were flickering rapidly too, so I could see at a glance that the flickering originated in the "Think" functionality rather than the "Act" functionality.

Visual quality of results:

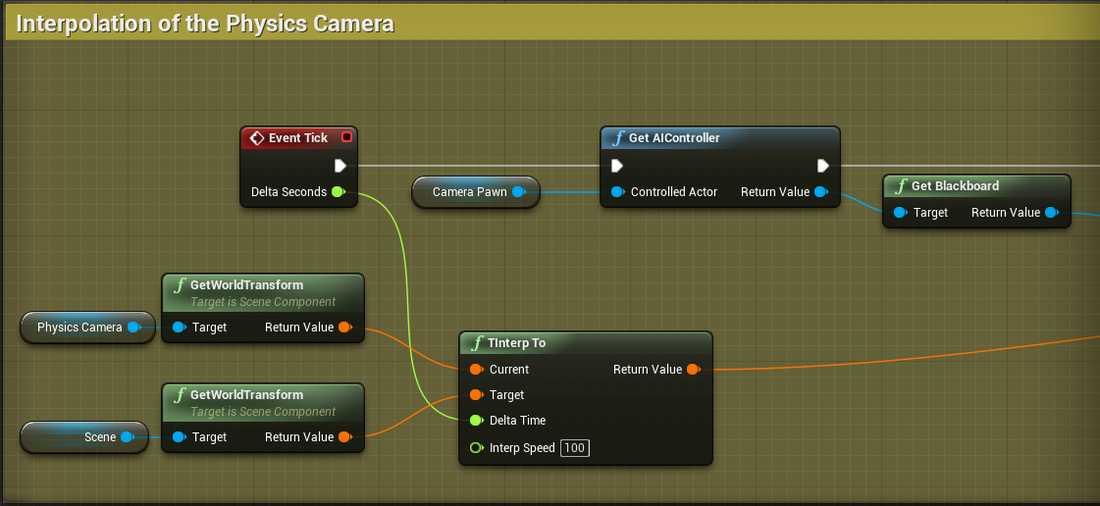

The final product of this test has some inadequacies, including noticeable and abrupt transitions between cameras, a 0.5 second cooldown interval after holding the key for 0.5 seconds, and a lack of communication between character and camera states. The "Think" functionality was actually easier to implement in Blueprint alone, although that required more nodes and a deeper understanding of how to get references from other scripts. Overall, the results demonstrate a modest advantage, which I believe will grow after iterating on the methods and implementation.

Conclusion: Why not use Blueprint only?

Blueprints are a powerful tool for empowering content creators in Unreal Engine 4, but developers should look for and be aware of areas like camera behaviours where other approaches may be more accessible, provide better feedback, and encourage developers to create implementations that others can readily comprehend.

Bonus Content:

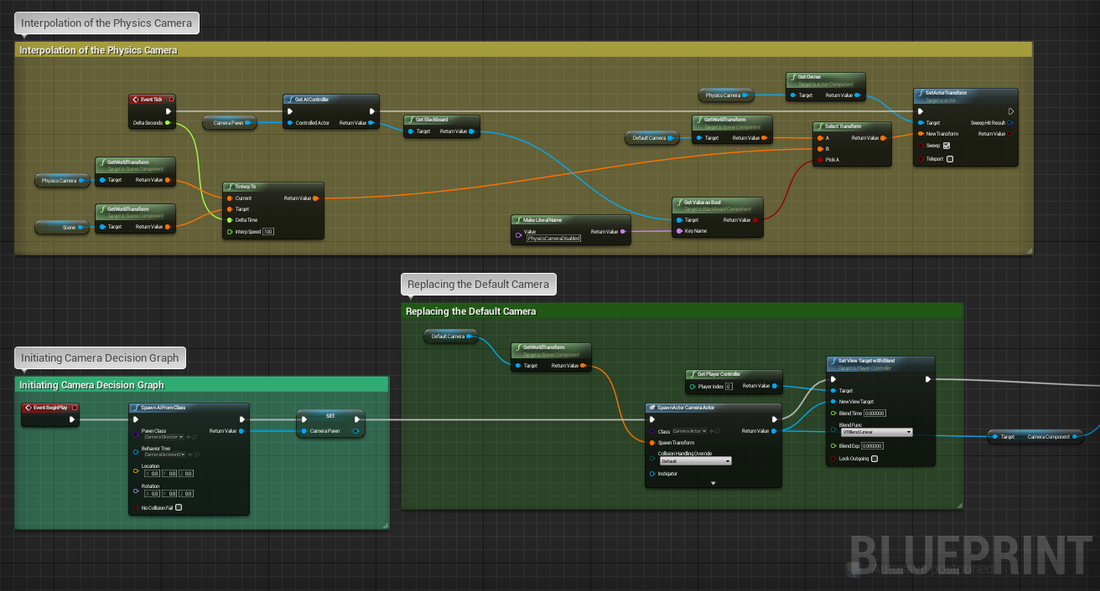

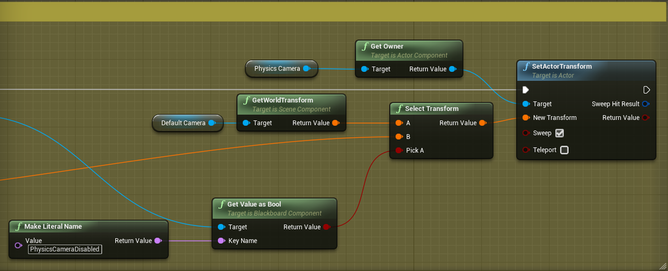

Method: Blueprint to support my behaviour tree

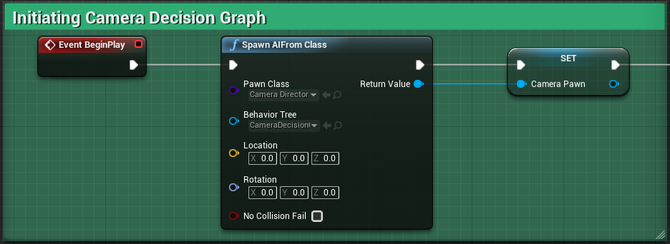

Newly added Blackboard and Behavior Tree

2a. Have our Side Scroller Character run Camera Decision Graph by calling RunBehaviorTree from Event BeginPlay (failed approach). Compiler result: RunBehaviorTree's Target property can only reference an object of type "AI Controller", so I had to try a new approach. Now I know a Camera Director object of type Pawn is required, since an AI Controller needs a Pawn to possess.

A new folder containing AI Controller, Pawn, and other AI objects.

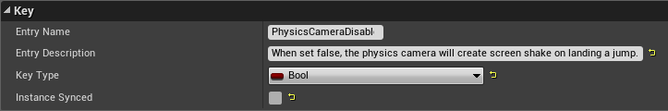

3a. Create a Boolean key in Camera Blackboard to switch between cameras.

3b. Set Camera Director's default AI Controller to the intended one (missed step).

3c. Set Camera Decision Graph's Blackboard to Camera Blackboard (missed step).

4a. Initialize the PhysicsCameraEnabled key from the Side Scroller Character with SetValueAsBool for Camera AI Controller on Event BeginPlay (failed approach).

Runtime result: SetValueAsBool couldn't access the Blackboard because the variable was out of scope. I also found a typo in a MakeLiteralName node, which caused the node to always return false.

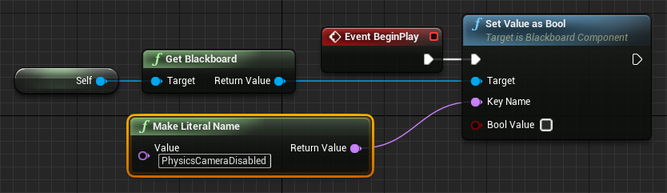

4b. Initialize the PhysicsCameraDisabled key from the Camera AI Controller with SetValueAsBool on Event BeginPlay. Requires steps 3b and 3c.
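Steps 4a and 4b both depend on the key actually existing on the Blackboard the controller is using. The toy model below, a hypothetical `ToyBlackboard` class rather than Unreal's Blackboard component, assumes that writes to an undeclared key are dropped and reads of an undeclared key return false. Under that assumption, a misspelled key name (like the MakeLiteralName typo in step 4a) is indistinguishable from a key that is legitimately false, which matches the symptom described above.

```cpp
#include <cassert>
#include <string>
#include <unordered_map>

// Hypothetical stand-in for a Blackboard asset: Boolean keys must be declared
// up front (as in step 3a). This is NOT Unreal's UBlackboardComponent API.
class ToyBlackboard {
public:
    void DeclareBoolKey(const std::string& name) { keys_[name] = false; }

    // Returns false and writes nothing if the key was never declared.
    bool SetValueAsBool(const std::string& name, bool value) {
        auto it = keys_.find(name);
        if (it == keys_.end()) return false;
        it->second = value;
        return true;
    }

    // Reads of undeclared keys fall back to false, so a misspelled key name
    // looks exactly like a declared key whose value is false.
    bool GetValueAsBool(const std::string& name) const {
        auto it = keys_.find(name);
        return it != keys_.end() && it->second;
    }

private:
    std::unordered_map<std::string, bool> keys_;
};
```

In this sketch, `SetValueAsBool` reports whether the key existed, so checking its return value catches a misspelled key at write time instead of leaving a condition that silently always fails.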

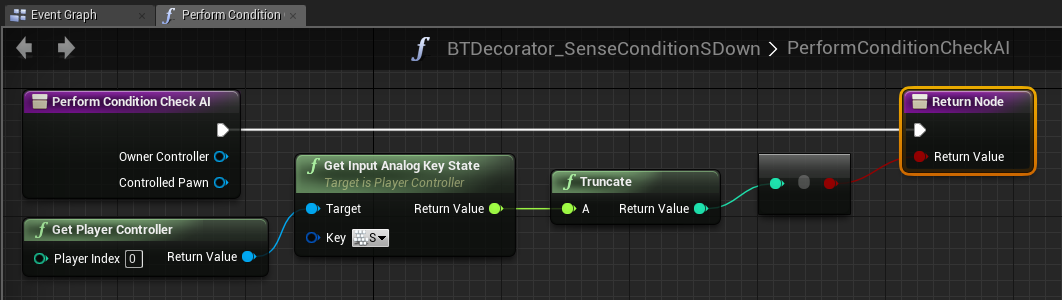

7. Create "Sense" functionality as a Decorator to be used on Behavior Tree nodes, overriding its inherited PerformConditionCheckAI function to return useful values.
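The decorator in step 7 can be sketched outside the engine as a base class with a virtual condition check. This is a hypothetical model, not Unreal's class hierarchy: `Decorator::PerformConditionCheck` stands in for the Blueprint-overridable PerformConditionCheckAI, and `InputState`, `SoftLandingKeyHeld` and `RunDecorated` are illustrative names. The decorated node runs only while the check passes.

```cpp
#include <cassert>

// Hypothetical engine-free decorator. In Unreal, a Blueprint decorator would
// override PerformConditionCheckAI; a virtual function plays that role here.
class Decorator {
public:
    virtual ~Decorator() = default;
    // Return true to allow the decorated node to run.
    virtual bool PerformConditionCheck() const = 0;
};

// Hypothetical input source standing in for the keyboard ("Sense").
struct InputState {
    bool sKeyHeld = false;
};

// "Sense" decorator: passes while the soft-landing key is held.
class SoftLandingKeyHeld : public Decorator {
public:
    explicit SoftLandingKeyHeld(const InputState& input) : input_(input) {}
    bool PerformConditionCheck() const override { return input_.sKeyHeld; }

private:
    const InputState& input_;  // observes live input; must outlive the decorator
};

// Decorated node: runs its "Act" body only when the condition passes.
bool RunDecorated(const Decorator& gate, int& lockedCameraActivations) {
    if (!gate.PerformConditionCheck()) return false;
    ++lockedCameraActivations;  // stands in for switching to the locked camera
    return true;
}
```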

Note: We are using a key press as a proxy for more complex changes in game state.

Caveat: There are undoubtedly better ways to implement "sensing, thinking, and acting" on cameras in Unreal Engine 4, and I will continue to post improvements to the methods and implementations in this test as Camera Experiment 1B, 1C, etc.
